How to Measure Impact with the Kirkpatrick Evaluation Model

Are you sure that your L&D programs are actually hitting the business mark?

Originally outlined in 1959, the Kirkpatrick Evaluation Model is still the most widely used method of training evaluation today.

It’s been updated in the decades since to reflect a new world of work and new ways of learning, which raises the questions: What is it, how has it changed, and how can it benefit L&D in modern organisations? We cover it all in this guide. Let’s dive in.

What is the Kirkpatrick Evaluation Model?

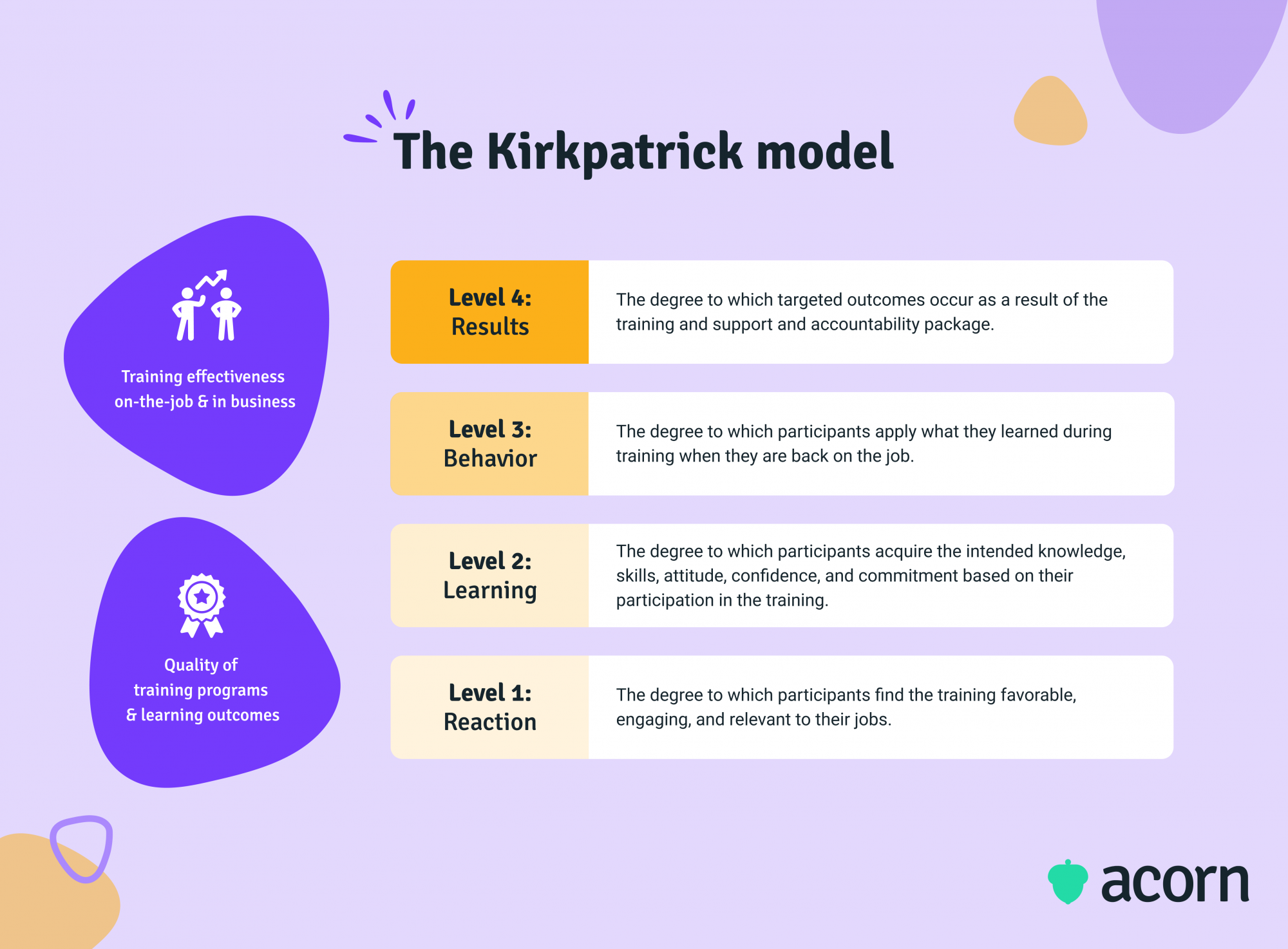

The Kirkpatrick Model is a popular method for analysing and evaluating the efficacy of a training program at different stages of the learning journey. It uses four successive criteria (or levels) for evaluation: Reaction, learning, behaviour, and results.

When to use a training evaluation model

There are plenty of reasons to take a more structured approach to evaluating L&D. It could be that:

- Learning outcomes aren’t solving business pain points

- Training efforts have little impact on employees’ day-to-day work

- Proposed learning isn’t aligned with business strategy

- The organisational value of L&D is hard to convey

- Visibility across L&D stages is split between functions, or sits solely with L&D.

Ultimately, there’s no right or wrong reason to implement a training evaluation model. As training evaluation models are frameworks for understanding learning efficacy, your focus simply needs to be on improving both the training and how you evaluate it.

What are the four levels of training evaluation?

Think of Kirkpatrick’s four levels of training evaluation as a sequence. Each is important, not least for its impact on the succeeding level. As you move through the sequence, the process becomes more complex in pursuit of more comprehensive information.

NB: You can’t skip a level to get to another, because each has a flow-on effect on the next. And in the original model, all these steps only take place once employees have completed a program.

Level 1: Reaction

Level 1 is about determining how learners responded to a training program.

Original Kirkpatrick model

The first level of evaluation looks at engagement and experience. It’s considered the most superficial level as it focuses on metrics such as course completions, progress rates, and satisfaction with the mode of learning.

Notably, the original Kirkpatrick model emphasises not simply gathering reactions to training, but gathering positive ones. The reasoning: Positive reactions may not guarantee learning, but a negative reaction can stop it from occurring at all.

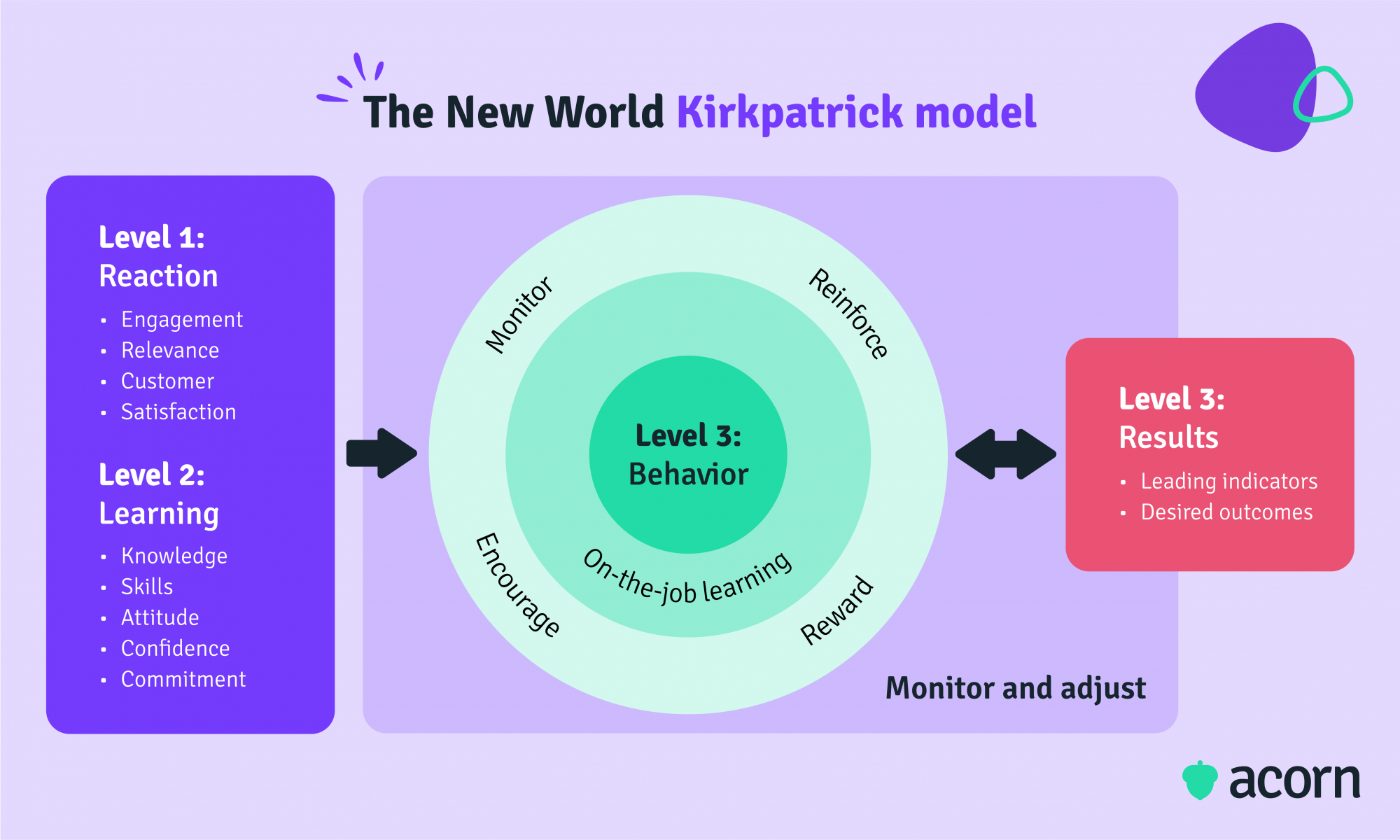

New world Kirkpatrick model

The updated view is that too many resources are devoted to this level of evaluation, given that it analyses the data least likely to reflect business or behavioural change. It still has its place, though, because it respects the learner’s view.

Alongside satisfaction and engagement, the new world Kirkpatrick model adds relevance. It asks you to consider whether learners will actually be able to use this information post-training.

Insights gained

- Missing or lacking topics

- User experience of supporting technology

- Personal responsibility

- Accommodation of learning styles

- Support needed for skills application.

Level 2: Learning

Here, we look more closely at what was or wasn’t learned.

Original

Evaluation is linked to defined objectives. Learning in this sense is the extent to which employees have gained new knowledge, skills and attitudes as a direct result of completing the program.

The old school model notes that there are three ways in which learning can take place: Attitudes change, knowledge increases, and skill improves. Some training will target all three, but at least one of these changes must occur before behaviour can be expected to change.

New world

Added to the assessment roster are confidence and commitment, to help bridge the jump between learning and behaviour. It’s important to understand how confident people are with what they’ve learned, to limit the “cycle of waste” that can occur when they repeatedly undertake courses but fail to apply what they learn on the job.

The new world model also characterises each of these desired changes with an illustrative phrase.

- Knowledge: “I know it.”

- Skill: “I can do it right now.”

- Attitude: “I believe this is valuable.”

- Confidence: “I think I can do this on the job.”

- Commitment: “I will do it on the job.”

Insights gained

- Capabilities developed/not developed

- Strength of objectives

- Commitment to learning

- Confidence in learnings.

Level 3: Behaviour

Level 3 delves into the influence of workplace culture on behavioural change.

Original

This is when KPIs are typically assessed against pre-training performance, making it the first point at which we really start to look at business impact. According to the original Kirkpatrick model, the following must exist for true behavioural change:

- Culture that inspires a personal desire to change

- Training that successfully reinforces what to do and how to do it

- Managers who encourage skills application

- Intrinsic or extrinsic rewards for applying new skills.

New world

Since the 2010s, evaluation has zoomed in on the components of improved job performance.

- Critical behaviours: Specific, consistent daily actions that have a business impact.

- Required drivers: These are the processes and systems that reinforce, encourage and reward critical behaviours.

- On-the-job learning: Recognises that most learning (70%, under the popular 70-20-10 rule of thumb) is experiential, and that personal motivation is as impactful as external support for post-training enablement.

Insights gained

- Manager accountabilities

- Team culture (as distinct from organisational culture)

- Outdated processes

- Employee morale.

Level 4: Results

These are the outcomes that occurred because, and only because, an employee completed a training program and received subsequent reinforcement.

Original

You’re answering the question “How did training impact our business?” As behaviours can be seen as intangible rather than direct drivers of business outcomes, leading indicators and KPIs take charge here.

Think of the reasons for having a training program to begin with. Increased sales, decreased costs, reduced turnover and boosted profits are all tangible, cumulative objectives. If there is a quantifiable change in one, was it because of a sustained behavioural change that was itself the result of training?
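To make the “quantifiable change” question concrete, here’s a minimal Python sketch that compares business KPIs before and after a program. The KPI names and figures are hypothetical placeholders. A raw before/after change says nothing on its own about cause; it only shows where to look for a behavioural link to training.

```python
from dataclasses import dataclass


@dataclass
class KpiReading:
    """One business KPI measured before and after a training program (hypothetical data)."""
    name: str
    before: float
    after: float
    higher_is_better: bool = True


def percent_change(reading: KpiReading) -> float:
    """Relative change from the pre-training baseline, as a percentage."""
    if reading.before == 0:
        raise ValueError(f"{reading.name}: a zero baseline has no percentage change")
    return (reading.after - reading.before) / reading.before * 100


def improved(reading: KpiReading) -> bool:
    """True if the KPI moved in the desired direction (up or down, depending on the KPI)."""
    delta = reading.after - reading.before
    return delta > 0 if reading.higher_is_better else delta < 0


if __name__ == "__main__":
    # Illustrative figures only; substitute your own pre- and post-training KPIs.
    readings = [
        KpiReading("monthly sales", before=120_000, after=132_000),
        KpiReading("staff turnover rate (%)", before=18.0, after=15.0, higher_is_better=False),
    ]
    for r in readings:
        status = "improved" if improved(r) else "no improvement"
        print(f"{r.name}: {percent_change(r):+.1f}% ({status})")
```

The `higher_is_better` flag matters because some objectives (costs, turnover) improve when they fall. Whether that improvement came from training is still the Level 3 and Level 4 question.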

New world

From the new world Kirkpatrick perspective, a common misapplication is taking too narrow a view of results. Silos occur when results are segmented by team or department. The ensuing misalignment is a hurdle not only to training effectiveness, but to true organisational effectiveness.

Still, training should start at this level. Defining desired results means you can more accurately define the behaviours that support those, and therefore the training needed to drive new behaviours.

Insights gained

- Return on investment (ROI)

- Business drivers

- Efficacy of analysis procedures.

How to use the Kirkpatrick model for evaluating training programs

The Kirkpatrick model is meant to get you thinking about desired results at every stage of the learning process, as well as your program evaluation methods. If you incorporate the new world approach into your L&D efforts, it’ll also push you to consider exactly what capabilities will deliver your desired outcomes before a training initiative has even been planned.

Why the old school way won’t serve you

In corporate L&D, ROI is often the key and the gatekeeper. Training professionals know strong, well-informed ROI can open the door for future L&D investments, but poor or no ROI can be all the reason decision-makers need to shut a program down.
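For reference, a common way to express training ROI (popularised by the Phillips ROI Methodology, which extends Kirkpatrick with a fifth level) is net program benefits divided by program costs, as a percentage. This Python sketch assumes the benefits have already been attributed to the program and converted to a monetary value, which is usually the hard part. The figures are hypothetical.

```python
def training_roi(monetary_benefits: float, program_costs: float) -> float:
    """Return ROI as a percentage: (benefits - costs) / costs * 100.

    Assumes benefits are already isolated to the training program and
    expressed in money, the difficult step this formula hides.
    """
    if program_costs <= 0:
        raise ValueError("program costs must be positive")
    net_benefits = monetary_benefits - program_costs
    return net_benefits / program_costs * 100


def benefit_cost_ratio(monetary_benefits: float, program_costs: float) -> float:
    """Return the benefit-cost ratio (BCR): benefits divided by costs."""
    if program_costs <= 0:
        raise ValueError("program costs must be positive")
    return monetary_benefits / program_costs


if __name__ == "__main__":
    # Hypothetical program: 50,000 in costs and 80,000 in attributed benefits (same currency).
    print(f"ROI: {training_roi(80_000, 50_000):.0f}%")
    print(f"BCR: {benefit_cost_ratio(80_000, 50_000):.2f}")
```

With these figures the program shows a 60% ROI, or a benefit-cost ratio of 1.6. The arithmetic is trivial. The credibility of the result depends entirely on how well the benefits were traced back through behaviour to training.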

Yet the difficulty of proving business impacts from specific training programs stops many organisations from even trying. In its 2020 Learning Measurement Study, Brandon Hall Group found fewer than 16% of organisations effectively identify and track metrics like business impact.

Few organisations get to Level 3 of Kirkpatrick evaluation, let alone Level 4. When resources are short, behavioural insights are drawn from the reaction and learning levels instead. Yet feedback from a training event, or even learning itself, can’t accurately predict skills application or changes in attitude, because it looks at the past and present.

But the old school way really fails because you don’t have a focus for your efforts when you start at reaction. And without focus from the beginning, the links between levels become more tenuous and direct impacts are harder to prove. Ergo, we start at results.

Level 4: Define the results you want

This is the planning phase. Starting here means you’re not working from assumptions; instead, you’re giving parameters to otherwise intangible metrics. It’s then easier to define value for executives and stakeholders, as you’re developing training initiatives from business drivers and positioning L&D as an agent of strategic change. It’ll also give employees (the people who feel the everyday effects of learning) a tangible link between a training event and their jobs.

Involve business leaders at this stage to collaborate on the most targeted outcomes for training design. Sometimes the urgency to measure training exists solely within the learning function, and a lack of wider interest can keep helpful resources and technology from where they need to be. Part of identifying key business stakeholders is creating champions for learning (and its results) within your organisation.

If you’ve got a capability framework, now’s the time to look at your gaps. What are you missing entirely? What’s not working well? A capability focus will get you the most measurable results on two fronts: Business impact and operational performance.

Capabilities have the added benefit of being SMART (that’s specific, measurable, achievable, relevant and timely). They’re highly specific and therefore measurable, achievable since you’re likely already doing them or building towards them, relevant to both business strategy and employees’ day-to-day, and because of all this, timely.

Level 3: Determine performance indicators

This is the pivotal level of evaluation since we’re linking training and business through behaviours. At this stage, we want to be thinking about things that are not training but that can sustain improved performance.

Think of these questions as guiding stars for evaluating behaviour.

What is considered optimal performance for a job role?

What results are individuals expected to personally deliver? Software developers might have a certain number of projects they must complete every month. What capabilities are they expected to possess? Line managers may need results-driven thinking, influence and communication. Scorecards that capture both provide standards and expectations for employees, managers and L&D teams alike.
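A scorecard can be as simple as a record of expected results and capabilities per role. As a hypothetical Python sketch (the roles, metric names, targets and capabilities below are illustrative assumptions, not a standard format), here’s one way to record a scorecard and flag gaps against it:

```python
from dataclasses import dataclass, field


@dataclass
class RoleScorecard:
    """Hypothetical scorecard: expected results and capabilities for one job role."""
    role: str
    result_targets: dict[str, float]  # metric name -> minimum expected value
    capabilities: set[str] = field(default_factory=set)

    def result_gaps(self, actuals: dict[str, float]) -> dict[str, float]:
        """Return each metric that falls short of its target, with the shortfall.

        A metric missing from `actuals` is treated as 0 (not yet recorded).
        """
        gaps = {}
        for metric, target in self.result_targets.items():
            actual = actuals.get(metric, 0.0)
            if actual < target:
                gaps[metric] = target - actual
        return gaps

    def capability_gaps(self, demonstrated: set[str]) -> set[str]:
        """Return expected capabilities not yet demonstrated on the job."""
        return self.capabilities - demonstrated


if __name__ == "__main__":
    developer = RoleScorecard(
        role="software developer",
        result_targets={"projects completed per month": 3},
    )
    line_manager = RoleScorecard(
        role="line manager",
        result_targets={},
        capabilities={"results-driven thinking", "influence", "communication"},
    )
    print(developer.result_gaps({"projects completed per month": 2}))
    print(sorted(line_manager.capability_gaps({"communication"})))
```

Keeping results and capabilities separate mirrors the two questions above: one gap shows up in performance data, the other is something managers observe through one-on-ones and job assignments.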

What required drivers support learning application?

Think rewards. Implement ways in which behavioural changes are supported by peers, such as lunch and learns, and rewarded by management. If employees routinely step up and use new capabilities of their own accord and you’ve got the budget, offer bonuses.

Consider the ways in which employees might integrate new skills at this level too, whether that’s a design side project or an experiment in marketing. Remember, personal motivation matters.

Who is responsible for post-training assessment?

Course completions won’t show you that behaviours have changed. This one’s on managers. Let’s not forget that this level evaluates manager accountabilities in the learning process.

Put it to them to:

- Schedule one-on-ones and performance reviews and provide job assignments that’ll test new capabilities.

- Monitor for day-to-day barriers to skills application in the culture.

- Co-design continual learning pathways and role model self-motivation.

Levels 2 & 1: Evaluate learning delivery & support

Some research suggests that the best use of the new world Kirkpatrick model combines Levels 1 and 2. This is largely because:

- Neither Level 1 nor 2 can definitively predict Level 4 outcomes.

- The gap between engagement and learning isn’t big (employees who enjoy a program are more likely to internalise content, and vice versa).

- The jump from learning new skills (L2) to applying them as behavioural changes (L3) is big.

How then do we combine these two levels in a way that’s still meaningful? Consider what modes of training are needed at both the learning and application stages, and how they reinforce one another.

They say if you don’t use a learned language, you’ll forget it. So, this stage is about continuing to pursue business results at the employee level. One way to do this is by creating learning programs that mimic the real-life business environment employees work in. In other words, develop and test capabilities in the same setting.

This gets us thinking about the mode of training and the way in which it needs to be reinforced in the workplace. Say the overarching goal is to create a leadership pipeline to support your organisation in its growth phase. A supporting program could be a mix of eLearning courses in your learning management system (e.g., emotional intelligence, dispute resolution) and job shadowing with a mentor. Regular surveys, also run through the LMS, capture employee sentiment, while the system also provides learning analytics.

Together, this provides iterative ways to evaluate training effectiveness (the focus of these two levels) through:

- Types of training delivery that complement the intended knowledge being taught

- Tangible progression and engagement data from the LMS to support L&D function choices

- Verifiable feedback that can also be translated into visual data

- Opportunities for employees to apply capabilities as they’re learning them, which reinforces learnings with context

- Real-time evaluation and course correction thanks to mentorship, which is likely to reduce learner attrition.

Conclusion

Learning should operate in the same way as business does: Agile and focused on results. There’s a reason the Kirkpatrick model has been used since its conception in the 50s, even if it’s had a refresh. Starting with the business results you want to achieve from training means you can more precisely guide behavioural changes, workplace reinforcement and even the capabilities you develop in the program, all before training has started.